This post has been a while in the making and follows up on an article about BGP communities that can be found here. We then followed it up with more discussion about FW design and placement, or lack thereof, on this podcast, which inspired me to finish up “part 2”.

Anyone who has ever had to run active/active data centers has come across this problem: how do I manage state? There are a few options:

- Ignore it and prepare yourself for a late night at the worst time.

- Take everyone’s word that systems will never have to talk to a system in a different security zone in the remote DC.

- Utilize communities and BGP policy to manage state, which is what we’ll focus on here.

One of the biggest reasons we see for stretching a virtual routing and forwarding (vrf) instance between DCs is to move DC to DC flows of the same security zone below the FWs. This reduces the load on the firewalls and makes for easier rule management. However, it does introduce a state problem.

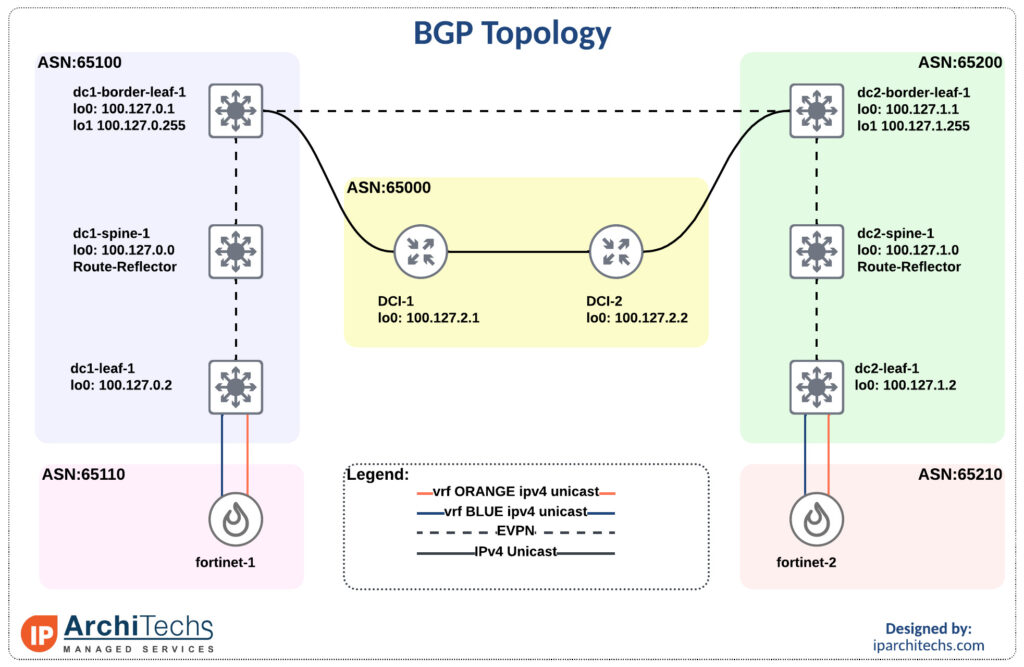

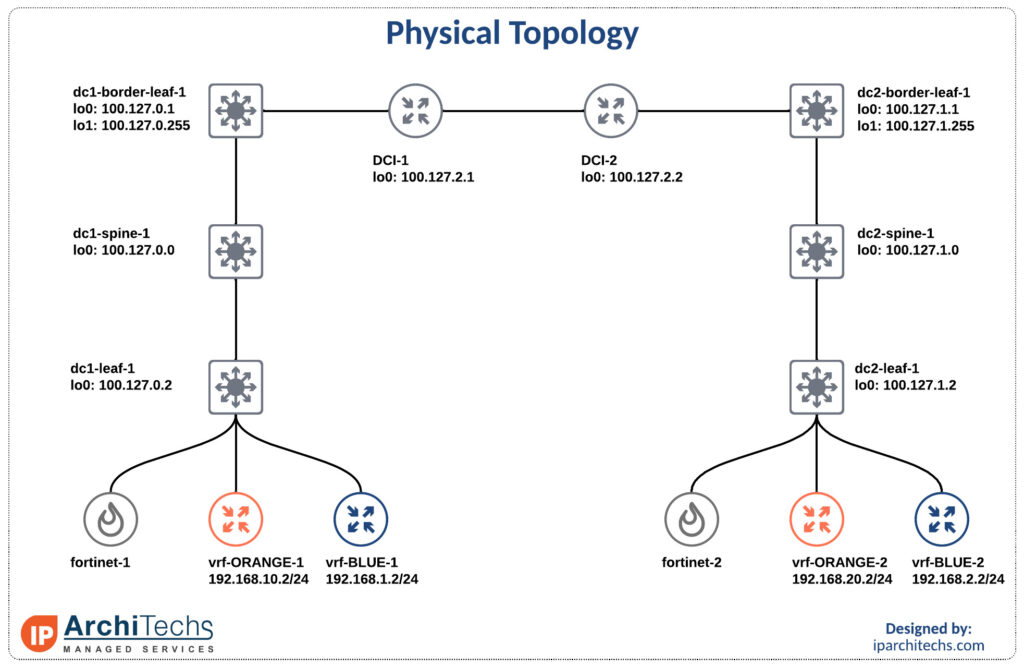

We’ll be using the smallest EVPN-multisite deployment you’ve ever seen with Nexus 9000v and Fortinet FWs.

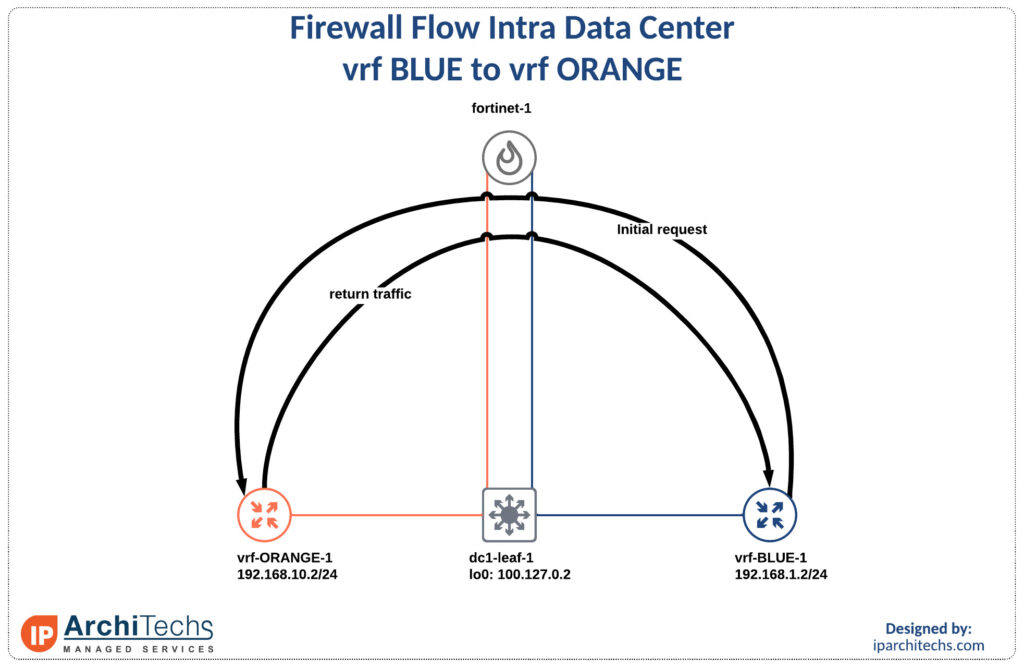

Inter vrf intra data center

The first flow we’ll look at is transitioning vrfs in the same data center. In this example and all work going forward, vrf Blue is allowed to initiate to vrf Orange. However, vrf Orange cannot initiate communication to vrf Blue.

Assuming your firewall rules are correct, this “just works” and is no different than running your standard deployment.

vrf-BLUE-1#ping 192.168.10.2

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 192.168.10.2, timeout is 2 seconds:

!!!!!

Success rate is 100 percent (5/5), round-trip min/avg/max = 4/5/8 ms

Initial request

dc1-leaf-1# show ip route 192.168.10.0/24 vrf BLUE

IP Route Table for VRF "BLUE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.10.0/24, ubest/mbest: 1/0

*via 172.16.0.1, [20/0], 17:29:08, bgp-65100, external, tag 65110

Fortinet-1 routing table

Return traffic

dc1-leaf-1# show ip route 192.168.1.0/24 vrf ORANGE

IP Route Table for VRF "ORANGE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.1.0/24, ubest/mbest: 1/0

*via 172.16.0.5, [20/0], 17:30:21, bgp-65100, external, tag 65110

Inter DC intra vrf flow

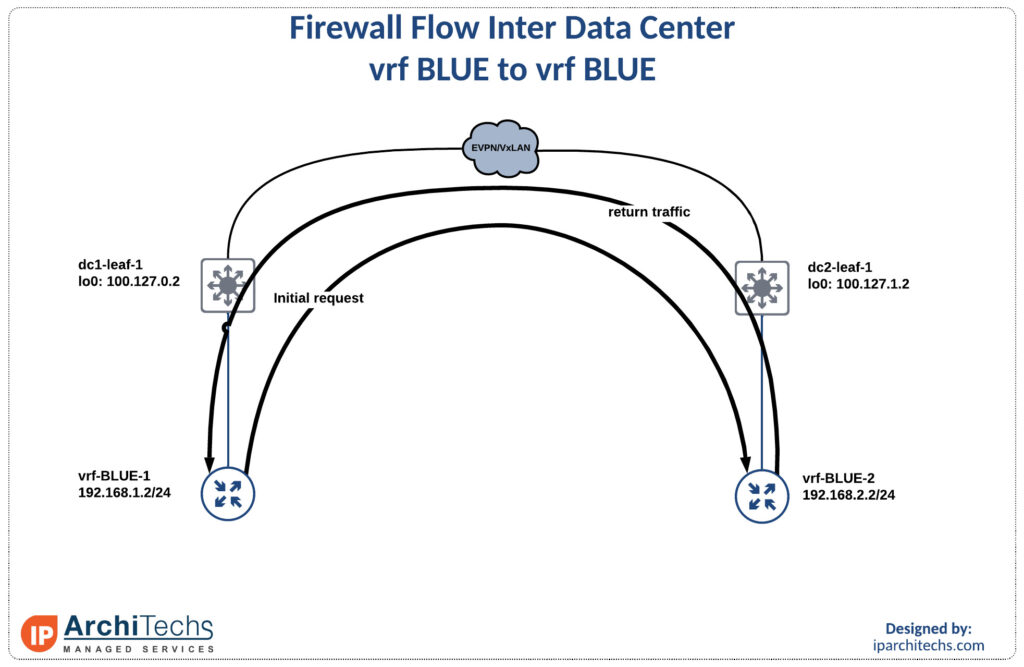

Here is the flow that normally starts this conversation. There is a desire to move same security zone flows and/or large traffic flows (replication) between DCs below the FWs. This can reduce load on the FWs and make rulesets easier to manage, since you don’t have to write a lot of exceptions for inbound flows on your untrusted interface.

vrf-BLUE-1#ping 192.168.2.2

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 192.168.2.2, timeout is 2 seconds:

!!!!!

Success rate is 100 percent (5/5), round-trip min/avg/max = 16/18/23 ms

Initial request

dc1-leaf-1# show ip route 192.168.2.0/24 vrf BLUE

IP Route Table for VRF "BLUE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.2.0/24, ubest/mbest: 1/0

*via 100.127.0.255%default, [200/1], 19:24:36, bgp-65100, internal, tag 65200, segid: 3003000 tunnelid: 0x647f00ff encap: VXLAN

Since we utilized EVPN-Multisite to extend the vrfs between DCs (to be covered in a later blog) the first stop is the border gateway. This is abstracted on the flow diagram but can be seen on the original BGP layout.

dc1-border-leaf-1# show ip route 192.168.2.0/24 vrf BLUE

IP Route Table for VRF "BLUE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.2.0/24, ubest/mbest: 1/0

*via 100.127.1.255%default, [20/1], 19:30:27, bgp-65100, external, tag 65200, segid: 3003000 tunnelid: 0x647f01ff encap: VXLAN

dc2-border-leaf-1# show ip route 192.168.2.0/24 vrf BLUE

IP Route Table for VRF "BLUE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.2.0/24, ubest/mbest: 1/0

*via 100.127.1.2%default, [200/0], 19:30:59, bgp-65200, internal, tag 65200, segid: 3003000 tunnelid: 0x647f0102 encap: VXLAN

dc2-leaf-1# show ip route 192.168.2.0/24 vrf BLUE

IP Route Table for VRF "BLUE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.2.0/24, ubest/mbest: 1/0, attached

*via 192.168.2.1, Vlan2000, [0/0], 20:00:30, direct, tag 3000

This traffic never reaches the FW on the way there and the same behavior happens on the return path. I’m not going to show every hop on the way as it’s identical but in reverse.

Inter vrf inter DC

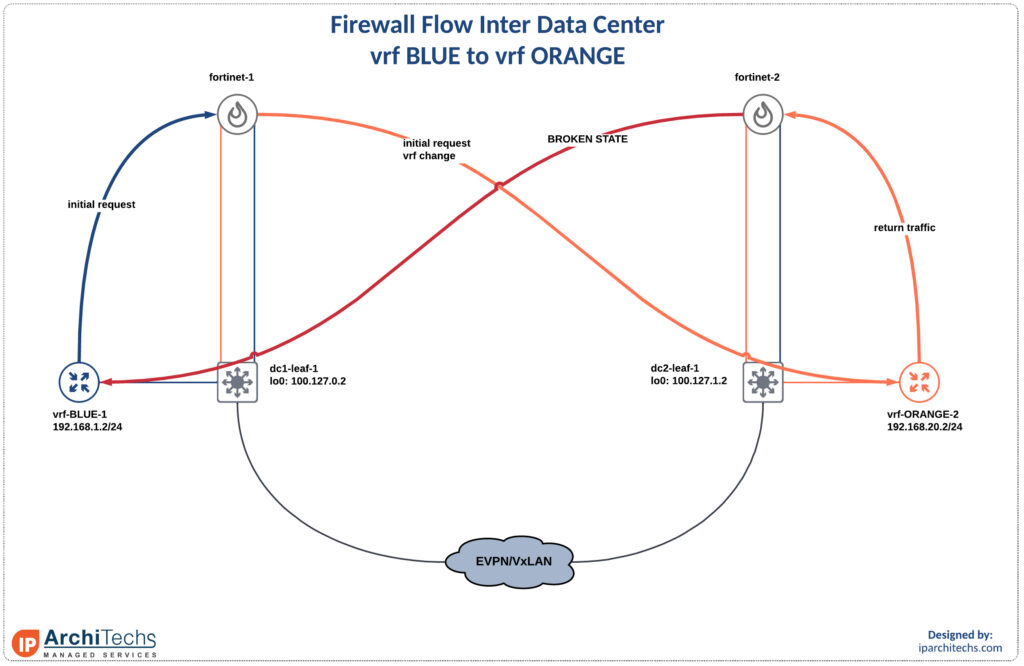

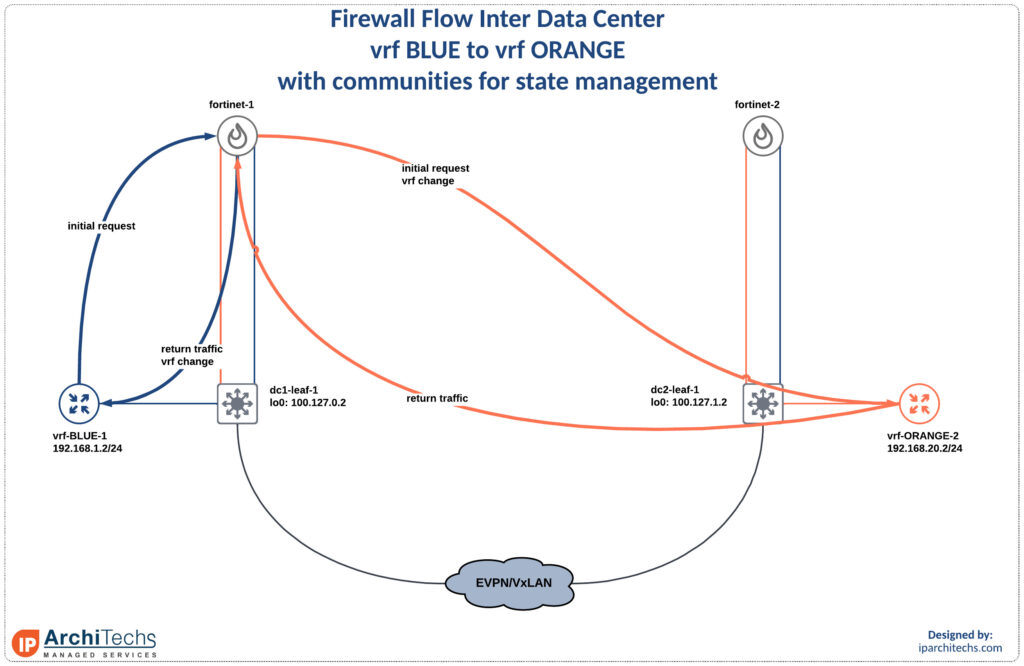

Here is the flow that causes a problem. When you change vrfs and change DCs without any other considerations there is an asymmetric path which introduces a state problem. After defining and analyzing the problem here we’ll walk through a solution.

vrf-BLUE-1#ping 192.168.20.2

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 192.168.20.2, timeout is 2 seconds:

.....

Success rate is 0 percent (0/5)

Initial request

dc1-leaf-1# show ip route 192.168.20.0/24 vrf BLUE

IP Route Table for VRF "BLUE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.20.0/24, ubest/mbest: 1/0

*via 172.16.0.1, [20/0], 17:50:50, bgp-65100, external, tag 65110

Fortinet-1 routing table

A vrf change has occurred, and we’re now in vrf Orange after starting in vrf Blue.

dc1-leaf-1# show ip route 192.168.20.0/24 vrf ORANGE

IP Route Table for VRF "ORANGE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.20.0/24, ubest/mbest: 1/0

*via 100.127.0.255%default, [200/1], 18:54:34, bgp-65100, internal, tag 65200, segid: 3003001 tunnelid: 0x647f00ff encap: VXLAN

We’re going to skip the border gateways as nothing exciting happens there.

dc2-leaf-1# show ip route 192.168.20.0/24 vrf ORANGE

IP Route Table for VRF "ORANGE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.20.0/24, ubest/mbest: 1/0, attached

*via 192.168.20.1, Vlan2001, [0/0], 18:58:30, direct, tag 3001

Now we hit the connected route on dc2-leaf-1 as we expected. Remember that we initiated state on fortinet-1.

Return traffic

Okay, now that we made it to vrf-ORANGE-2, what happens to the return traffic?

dc2-leaf-1# show ip route 192.168.1.0/24 vrf ORANGE

IP Route Table for VRF "ORANGE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.1.0/24, ubest/mbest: 1/0

*via 172.16.1.5, [20/0], 17:47:05, bgp-65200, external, tag 65210

Fortinet-2 routing table

The first thing that the return traffic does is try to switch vrfs back to vrf BLUE through fortinet-2. However, fortinet-2 doesn’t have state for this flow. Since vrf-ORANGE can’t initiate communication with vrf-BLUE and there is no state in fortinet-2, the traffic is dropped on the default rule.
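To make the failure concrete, here is a minimal Python sketch of two independent stateful firewalls. It is a toy model, not how FortiOS works internally: the `Firewall` class, the `ALLOWED_INITIATIONS` set, and the source host address 192.168.1.2 are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

# Zone policy from this post: BLUE may initiate to ORANGE, never the reverse.
ALLOWED_INITIATIONS = {("BLUE", "ORANGE")}


@dataclass
class Firewall:
    """Toy stateful firewall: a session table plus a zone-pair policy."""
    name: str
    sessions: set = field(default_factory=set)

    def forward(self, src_zone, src_ip, dst_zone, dst_ip):
        flow = (src_ip, dst_ip)
        reverse = (dst_ip, src_ip)
        # Packets that belong to a session this firewall already tracks pass.
        if flow in self.sessions or reverse in self.sessions:
            return True
        # Anything else is a new session and has to match the policy.
        if (src_zone, dst_zone) in ALLOWED_INITIATIONS:
            self.sessions.add(flow)
            return True
        return False  # implicit deny (the "default rule")


fortinet_1 = Firewall("fortinet-1")
fortinet_2 = Firewall("fortinet-2")

# Echo request: vrf BLUE in DC1 to vrf ORANGE in DC2, through fortinet-1.
# 192.168.1.2 is an assumed address for vrf-BLUE-1.
request_ok = fortinet_1.forward("BLUE", "192.168.1.2", "ORANGE", "192.168.20.2")

# Echo reply on the asymmetric path through fortinet-2, which has no state.
reply_via_fw2 = fortinet_2.forward("ORANGE", "192.168.20.2", "BLUE", "192.168.1.2")

# The same reply through fortinet-1 matches the existing session.
reply_via_fw1 = fortinet_1.forward("ORANGE", "192.168.20.2", "BLUE", "192.168.1.2")

print(request_ok, reply_via_fw2, reply_via_fw1)  # True False True
```

The only difference between the dropped reply and the accepted one is which firewall sees it, which is why the rest of this post is about steering the return path.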

The Solution

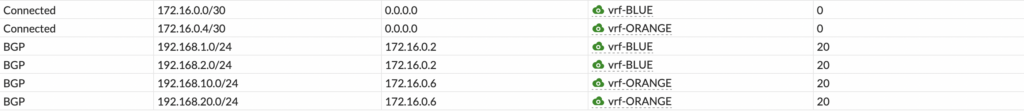

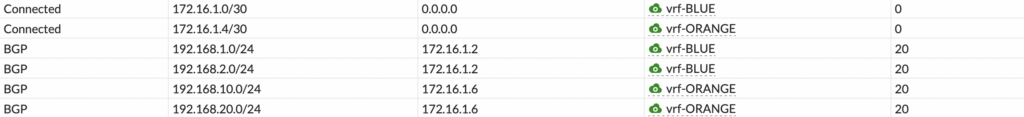

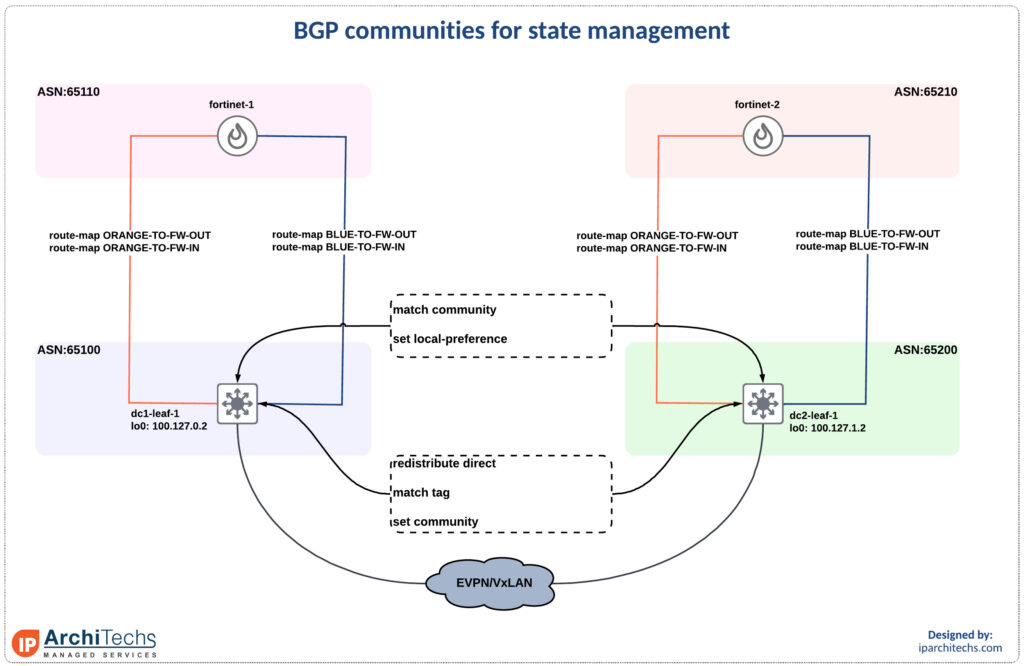

The first thing we’re going to do is set a community on generation of the type-5 route. This is done by matching a tag of the $L3VNI-VLAN-ID and setting a community of $ASN:$L3VNI-VLAN-ID.
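As a rough illustration of the naming scheme, the Python sketch below builds the $ASN:$L3VNI-VLAN-ID community for each connected prefix. The function names are made up for this example; the prefixes, tags, and ASNs are the ones used in this lab.

```python
def community_for(asn: int, l3vni_vlan_id: int) -> str:
    """Build a standard community in ASN:value form."""
    # Each half of a standard BGP community is a 16-bit number.
    if not (0 <= asn <= 65535 and 0 <= l3vni_vlan_id <= 65535):
        raise ValueError("both halves must fit in 16 bits")
    return f"{asn}:{l3vni_vlan_id}"


def tag_connected_routes(asn, connected):
    """Map (prefix, tag) pairs to {prefix: community}, mimicking a route-map
    that matches a tag and sets a community during redistribution."""
    return {prefix: community_for(asn, tag) for prefix, tag in connected}


dc1 = tag_connected_routes(65100, [("192.168.1.0/24", 3000), ("192.168.10.0/24", 3001)])
dc2 = tag_connected_routes(65200, [("192.168.2.0/24", 3000), ("192.168.20.0/24", 3001)])

print(dc1["192.168.1.0/24"], dc2["192.168.20.0/24"])  # 65100:3000 65200:3001
```

Because the community encodes both the originating DC and the vrf, any leaf can tell where a prefix came from without needing a prefix list per subnet.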

vlan 2000

name BLUE-DATA

vn-segment 2002000

vlan 2001

name ORANGE-DATA

vn-segment 2002001

vlan 3000

name VRF-BLUE

vn-segment 3003000

vlan 3001

name VRF-ORANGE

vn-segment 3003001

route-map RM-CON-BLUE permit 10

match tag 3000

set community 65100:3000

route-map RM-CON-ORANGE permit 10

match tag 3001

set community 65100:3001

vrf context BLUE

vni 3003000

rd auto

address-family ipv4 unicast

route-target both auto

route-target both auto evpn

vrf context ORANGE

vni 3003001

rd auto

address-family ipv4 unicast

route-target both auto

route-target both auto evpn

interface Vlan2000

no shutdown

vrf member BLUE

ip address 192.168.1.1/24 tag 3000

fabric forwarding mode anycast-gateway

interface Vlan2001

no shutdown

vrf member ORANGE

ip address 192.168.10.1/24 tag 3001

fabric forwarding mode anycast-gateway

interface Vlan3000

no shutdown

vrf member BLUE

ip forward

interface Vlan3001

no shutdown

vrf member ORANGE

ip forward

By setting the policy logic correctly (matching on these communities and adjusting local preference), we can force the traffic to always utilize the FW in the data center it originated from.
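Before reading the device configuration, it may help to see the decision in isolation. The Python sketch below models the ORANGE-TO-FW-IN route-map from dc2-leaf-1 (shown in the configuration that follows) and a simplified best-path choice that compares local preference only, ignoring weight and every later tie-breaker. The function names are hypothetical, and the assumption that the EVPN-learned path carries the default local preference of 100 is mine.

```python
DEFAULT_LOCAL_PREF = 100  # BGP default when a policy sets nothing

# ORANGE-TO-FW-IN on dc2-leaf-1: (communities matched, local-pref set or None)
ORANGE_TO_FW_IN_DC2 = [
    ({"65200:3000"}, None),               # permit 10: DC2-BLUE-CL
    ({"65100:3000", "65100:3001"}, 80),   # permit 20: DC1-BLUE-CL DC1-ORANGE-CL
]


def apply_inbound(route_map, community):
    """Return the local preference after the route-map, or None if denied."""
    for communities, local_pref in route_map:
        if community in communities:
            return DEFAULT_LOCAL_PREF if local_pref is None else local_pref
    return None  # no clause matched: implicit deny


def best_path(paths):
    """Simplified best path: highest local preference wins."""
    return max(paths, key=lambda p: p["local_pref"])


# Candidate paths on dc2-leaf-1, vrf ORANGE, for 192.168.1.0/24
# (a DC1 BLUE prefix carrying community 65100:3000).
paths = [
    {"via": "fortinet-2", "local_pref": apply_inbound(ORANGE_TO_FW_IN_DC2, "65100:3000")},
    {"via": "EVPN to DC1", "local_pref": DEFAULT_LOCAL_PREF},  # assumed default
]

print(best_path(paths)["via"])  # EVPN to DC1
```

Lowering the local preference on the firewall-learned copy of a remote DC prefix is what pushes the return traffic back over the stretched vrf to the DC that holds the session.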

dc1-leaf-1# show run rpm

!Command: show running-config rpm

!Running configuration last done at: Sun Mar 20 15:08:38 2022

!Time: Sun Mar 20 15:43:29 2022

version 9.3(3) Bios:version

ip community-list standard DC1-BLUE-CL seq 10 permit 65100:3000

ip community-list standard DC1-ORANGE-CL seq 10 permit 65100:3001

ip community-list standard DC2-BLUE-CL seq 10 permit 65200:3000

ip community-list standard DC2-ORANGE-CL seq 10 permit 65200:3001

route-map BLUE-TO-FW-IN permit 10

match community DC1-ORANGE-CL

route-map BLUE-TO-FW-IN permit 20

match community DC2-ORANGE-CL

set local-preference 120

route-map BLUE-TO-FW-OUT permit 10

match community DC1-BLUE-CL DC2-BLUE-CL

route-map ORANGE-TO-FW-IN permit 10

match community DC1-BLUE-CL

route-map ORANGE-TO-FW-IN permit 20

match community DC2-BLUE-CL DC2-ORANGE-CL

set local-preference 80

route-map ORANGE-TO-FW-OUT permit 10

match community DC1-ORANGE-CL DC2-ORANGE-CL

route-map RM-CON-BLUE permit 10

match tag 3000

set community 65100:3000

route-map RM-CON-ORANGE permit 10

match tag 3001

set community 65100:3001

dc1-leaf-1# show run bgp

!Command: show running-config bgp

!Running configuration last done at: Sun Mar 20 15:08:38 2022

!Time: Sun Mar 20 15:44:05 2022

version 9.3(3) Bios:version

feature bgp

router bgp 65100

neighbor 100.127.0.0

remote-as 65100

update-source loopback0

address-family l2vpn evpn

send-community extended

vrf BLUE

address-family ipv4 unicast

advertise l2vpn evpn

redistribute direct route-map RM-CON-BLUE

neighbor 172.16.0.1

remote-as 65110

address-family ipv4 unicast

send-community

route-map BLUE-TO-FW-IN in

route-map BLUE-TO-FW-OUT out

vrf ORANGE

address-family ipv4 unicast

redistribute direct route-map RM-CON-ORANGE

neighbor 172.16.0.5

remote-as 65110

address-family ipv4 unicast

send-community

route-map ORANGE-TO-FW-IN in

route-map ORANGE-TO-FW-OUT out

dc2-leaf-1# show run rpm

!Command: show running-config rpm

!Running configuration last done at: Sun Mar 20 15:13:30 2022

!Time: Sun Mar 20 15:45:25 2022

version 9.3(3) Bios:version

ip community-list standard DC1-BLUE-CL seq 10 permit 65100:3000

ip community-list standard DC1-ORANGE-CL seq 10 permit 65100:3001

ip community-list standard DC2-BLUE-CL seq 10 permit 65200:3000

ip community-list standard DC2-ORANGE-CL seq 10 permit 65200:3001

route-map BLUE-TO-FW-IN permit 10

match community DC2-ORANGE-CL

route-map BLUE-TO-FW-IN permit 20

match community DC1-ORANGE-CL

set local-preference 120

route-map BLUE-TO-FW-OUT permit 10

match community DC1-BLUE-CL DC2-BLUE-CL

route-map ORANGE-TO-FW-IN permit 10

match community DC2-BLUE-CL

route-map ORANGE-TO-FW-IN permit 20

match community DC1-BLUE-CL DC1-ORANGE-CL

set local-preference 80

route-map ORANGE-TO-FW-OUT permit 10

match community DC1-ORANGE-CL DC2-ORANGE-CL

route-map RM-CON-BLUE permit 10

match tag 3000

set community 65200:3000

route-map RM-CON-ORANGE permit 10

match tag 3001

set community 65200:3001

dc2-leaf-1# show run bgp

!Command: show running-config bgp

!Running configuration last done at: Sun Mar 20 15:13:30 2022

!Time: Sun Mar 20 15:45:40 2022

version 9.3(3) Bios:version

feature bgp

router bgp 65200

neighbor 100.127.1.0

remote-as 65200

update-source loopback0

address-family l2vpn evpn

send-community

send-community extended

vrf BLUE

address-family ipv4 unicast

redistribute direct route-map RM-CON-BLUE

neighbor 172.16.1.1

remote-as 65210

address-family ipv4 unicast

send-community

route-map BLUE-TO-FW-IN in

route-map BLUE-TO-FW-OUT out

vrf ORANGE

address-family ipv4 unicast

redistribute direct route-map RM-CON-ORANGE

neighbor 172.16.1.5

remote-as 65210

address-family ipv4 unicast

send-community

route-map ORANGE-TO-FW-IN in

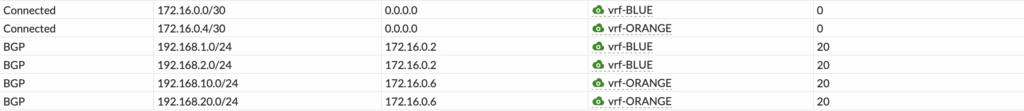

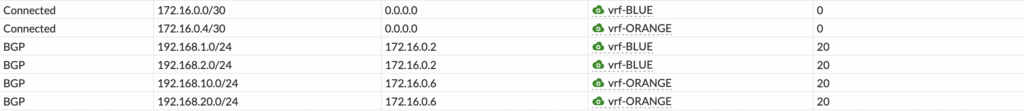

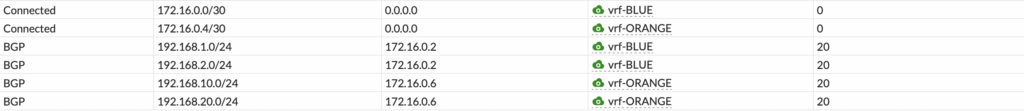

route-map ORANGE-TO-FW-OUT outHere is the result of this implementation

vrf-BLUE-1#ping 192.168.20.2

Type escape sequence to abort.

Sending 5, 100-byte ICMP Echos to 192.168.20.2, timeout is 2 seconds:

!!!!!

Success rate is 100 percent (5/5), round-trip min/avg/max = 16/18/22 ms

Let’s look at the routing tables now.

dc1-leaf-1# show ip route 192.168.20.0/24 vrf BLUE

IP Route Table for VRF "BLUE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.20.0/24, ubest/mbest: 1/0

*via 172.16.0.1, [20/0], 00:40:23, bgp-65100, external, tag 65110

Fortinet-1 routing table

We changed vrfs to vrf ORANGE now.

dc1-leaf-1# show ip route 192.168.20.0/24 vrf ORANGE

IP Route Table for VRF "ORANGE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.20.0/24, ubest/mbest: 1/0

*via 100.127.0.255%default, [200/1], 20:54:07, bgp-65100, internal, tag 65200, segid: 3003001 tunnelid: 0x647f00ff encap: VXLAN

Again, we’ll skip over the border gateways.

dc2-leaf-1# show ip route 192.168.20.0/24 vrf ORANGE

IP Route Table for VRF "ORANGE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.20.0/24, ubest/mbest: 1/0, attached

*via 192.168.20.1, Vlan2001, [0/0], 20:57:54, direct, tag 3001

Return traffic

Now the return traffic will go back to fortinet-1 where we have the original state instead of fortinet-2.

dc2-leaf-1# show ip route 192.168.1.0/24 vrf ORANGE

IP Route Table for VRF "ORANGE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.1.0/24, ubest/mbest: 1/0

*via 100.127.1.255%default, [200/2000], 00:43:36, bgp-65200, internal, tag 65100, segid: 3003001 tunnelid: 0x647f01ff encap: VXLAN

Skipping over the border gateways, we land back at dc1-leaf-1.

dc1-leaf-1# show ip route 192.168.1.0/24 vrf ORANGE

IP Route Table for VRF "ORANGE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.1.0/24, ubest/mbest: 1/0

*via 172.16.0.5, [20/0], 19:54:44, bgp-65100, external, tag 65110

And we arrive back at fortinet-1, where we have a valid session.

We switch vrfs back to vrf BLUE and hit the connected route.

dc1-leaf-1# show ip route 192.168.1.0/24 vrf BLUE

IP Route Table for VRF "BLUE"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

192.168.1.0/24, ubest/mbest: 1/0, attached

*via 192.168.1.1, Vlan2000, [0/0], 22:19:11, direct, tag 3000

Conclusion

That was a lot of work to meet the goal of utilizing both data centers, allowing vrf to vrf communication below firewalls, and not breaking state.

However, it is manageable. It also gives a few other benefits such as:

- being able to take an entire DC’s firewall stack offline and not lose connectivity

- less load on FWs

- less FW rule complexity

But with this comes increased routing complexity. So as always there are tradeoffs! Make sure you analyze them against your business needs before proceeding.

If you’d like to know more or need help with that, contact us at IP Architechs.

+1 (855) 645-7684
