Members of the IPA team were pleased to assist NANOG 94, where we found lots of food, excellent drinks, great friends and colleagues, and state-of-the-art talks on many topics related to networking. This edition was focused on datacenters, protocols, automation, platforms and of course, AI.

AI development and adoption is still on the hype, and it doesn’t seem to be slowing at any time soon, but rather accelerating. One of the talks regarding this topic was “GenAI powered Network Automation”, which asked us a very interesting question:

- Can LLM Agents be Network Operators?

Network troubleshooting can (and should) be a structured approach, based on standards and best practices, which hopefully can get us to a standardized methodology that we can apply to most vendors, systems and platforms. This structured methodology not only improves consistency in how issues are diagnosed and resolved but also enhances collaboration across teams.

By basing our efforts on established standards and industry best practices, we can reduce guesswork, minimize downtime, and increase efficiency. I mean, hey, I would really like to keep your phone not ringing with emergencies.

But all of us know that there will be bumps on the road. Is AI up to this task? Or at least, give us a helping hand?

Of course, as with many new technologies over the years, there was excitement, consensus and disbelief, so we thought we would give it a try.

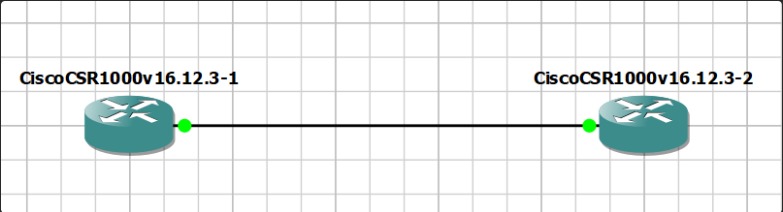

Preparing our test environment

Our objective here is to challenge the ability of some consumer AI tools, with a very simple scenario.

Two routers, running IOS-XE, a few addresses v4 and v6 addresses, OSPF and OSPFv3 as IGP, and iBGP between them.

By breaking protocols and running status, we expect the agents to be able to restore the network to a functioning state – with no previous information about the network.

On this approach, we will ask Gemini and ChatGPT the same questions

Configuration for both test routers is as simple as it gets..

Router 1 Config

hostname R1

ipv6 unicast-routing

interface lo0

ip address 192.168.1.1 255.255.255.255

ipv6 address 2001:db8::192:168:1:1/128

ipv6 ospf 1 area 0

interface gi1

ip address 10.1.2.1 255.255.255.252

ipv6 address 2001:db8::2001:10:1:2:1/64

ipv6 ospf 1 area 0

router ospf 1

router-id 192.168.1.1

passive-interface default

no passive-interface gi1

network 192.168.1.1 0.0.0.0 area 0

network 10.1.2.0 0.0.0.3 area 0

router ospfv3 1

router-id 192.168.1.1

passive-interface default

no passive-interface gi1

network 192.168.1.0 0.0.0.0 area 0

network 10.1.2.0 0.0.0.3 area 0Router 2 Config

hostname R2

ipv6 unicast-routing

interface lo0

ip address 192.168.1.2 255.255.255.255

ipv6 address 2001:db8::192:168:1:2/128

ipv6 ospf 1 area 0

interface gi1

ip address 10.1.2.2 255.255.255.252

ipv6 address 2001:db8::2001:10:1:2:2/64

ipv6 ospf 1 area 0

router ospf 1

router-id 192.168.1.2

passive-interface default

no passive-interface gi1

network 192.168.1.2 0.0.0.0 area 0

network 10.1.2.0 0.0.0.3 area 0

router ospfv3 1

router-id 192.168.1.2

passive-interface default

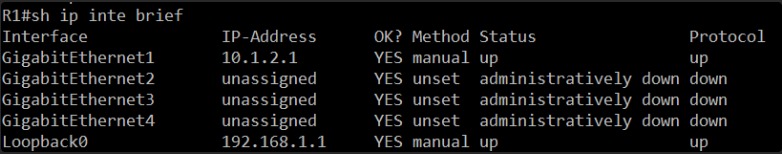

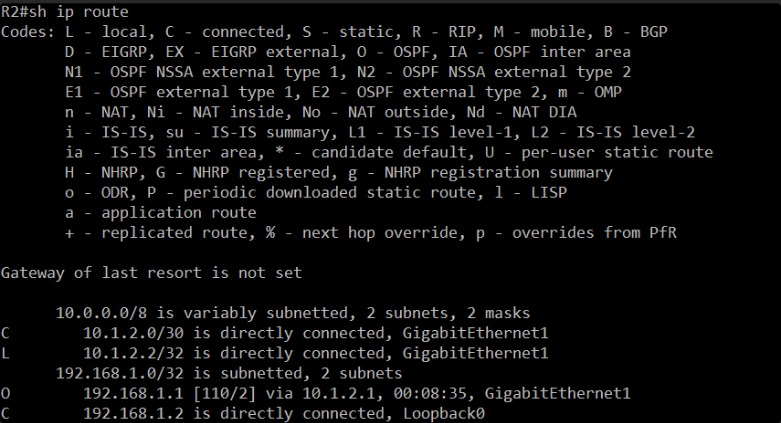

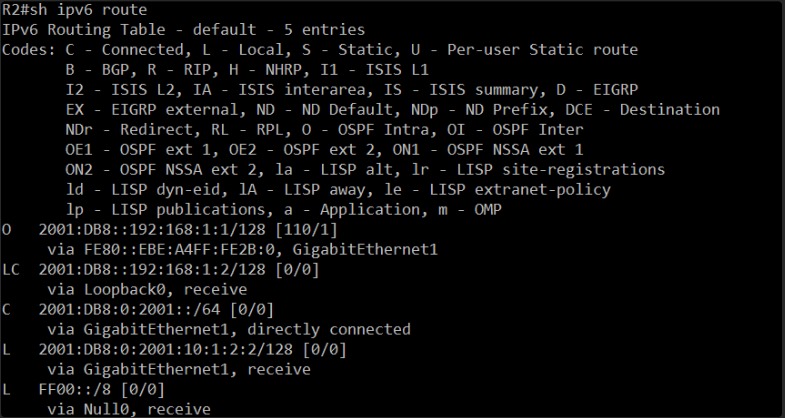

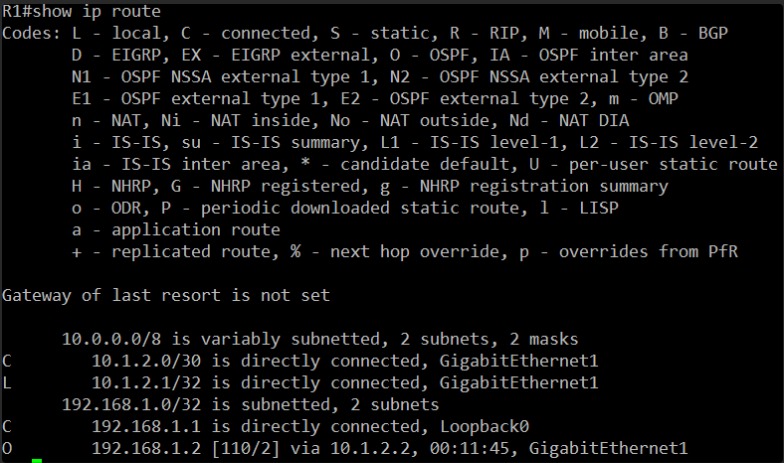

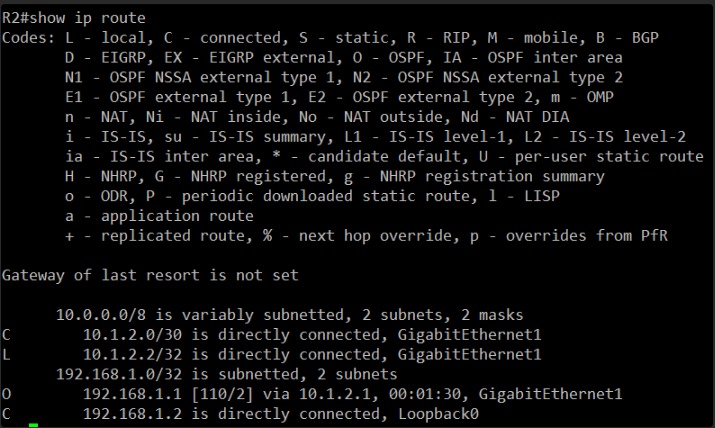

no passive-interface gi1IPv4 and IPv6 Routing tables

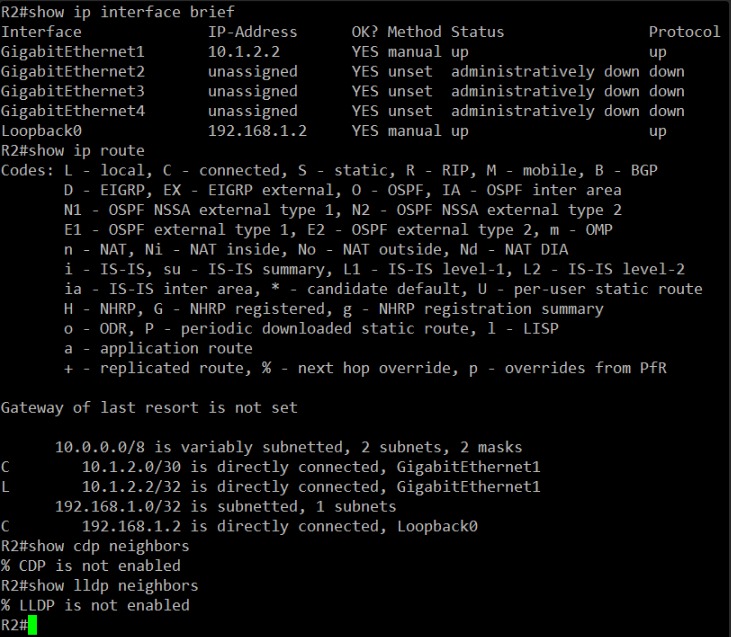

The previous config gets the network into the following state. We are aware of this, but we won’t feed the AI agents with this information. We wanna challenge them.

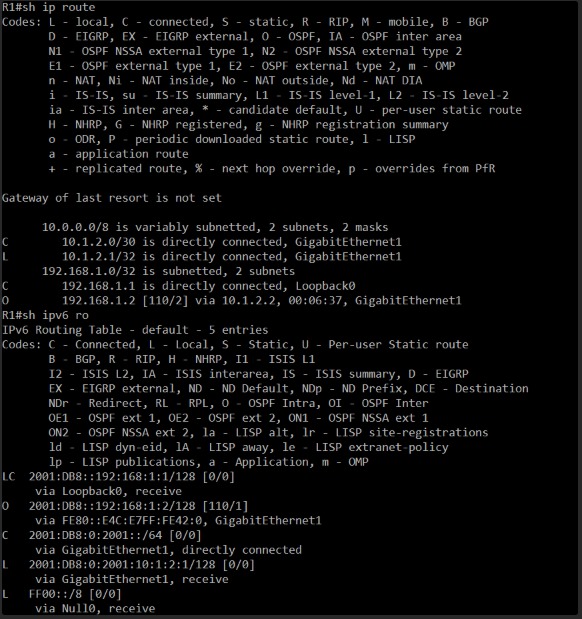

Converting AI agents into Network Engineers

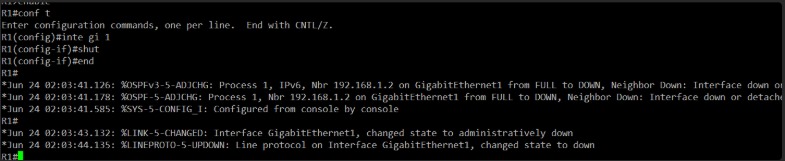

For this testing, we will challenge Gemini and ChatGPT into some broken scenarios. I’ll start by shutting down Gi1 interface at Router1.

And of course, we need to prompt them so the models can understand what we are doing.

You are a network engineer. Your task is to help in tshoot and hopefully fix issues on your own. The only thing we know is something is broken between the communication between 2 routers. We dont have any knowledge of the previous state of the network, and lucky us, we can get CLI into both devices and check and change config. I dont want you to give me instructions but rather act as the engineer itself. I will just be your interface between your train of thought and the command line.

How would you like to proceed?

Gemini as a network operator – Full conversation

https://g.co/gemini/share/6fe5b52e8424

Okay, understood. I’m the network engineer, and you’re my interface to the devices. My goal is to troubleshoot and fix the communication issue between these two routers, starting from a blank slate regarding their previous state.

First, I need to establish a baseline. I’ll start by checking the basic connectivity and configuration on both routers.

My initial approach will be:

1. Verify Physical Connectivity and Interface Status:

- Are the interfaces up/up?

- Are there any obvious errors on the interfaces (CRC, input errors, etc.)?

2. Check IP Addressing:

- Are the interfaces on the same subnet?

- Is the subnet mask correct?

3. Test Basic Reachability (Ping):

- Can each router ping its directly connected neighbor’s interface IP?

4. Check Routing Table:

- Do both routers have routes to reach each other’s networks?

- Are there any default routes that might be misdirecting traffic?

- What routing protocols are in use, if any?

5. Inspect for ACLs or Firewall Rules:

- Are there any access control lists or firewall rules blocking traffic between the two routers?

6. Review Log Messages:

- Are there any relevant error messages in the router logs that indicate a problem?

Given this, my first step will be to log into both routers and check the interface status and IP configuration.

What is the hostname or IP address of the first router you’d like me to investigate? Let’s call them Router A and Router B for now. I’ll need to know which one I’m on when I issue commands.

first router is R1, the other is R2

I can get cli now into R1

Excellent. I’m now on R1.

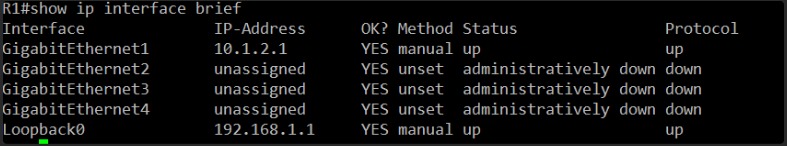

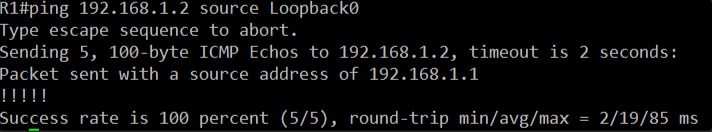

My first command will be to check the interface status and IP configuration.

Please issue the following command on R1:

show ip interface brief

This will give me a quick overview of all interfaces, their IP addresses, and their current status (up/down, administratively down).

Okay, this is a critical piece of information.

On R1, the GigabitEthernet1 interface, which has the IP address 10.1.2.1, is in an “administratively down” state. This means it’s been manually shut down. The other GigabitEthernet interfaces are also administratively down and unassigned. The Loopback0 interface is up, but that’s not the interface connecting to R2.

This is likely the root cause of the communication issue. We need to bring up the interface that connects to R2. Assuming GigabitEthernet1 is the interface intended to connect to R2 (given its IP 10.1.2.1), we need to enable it.

My next step is to bring up GigabitEthernet1 on R1.

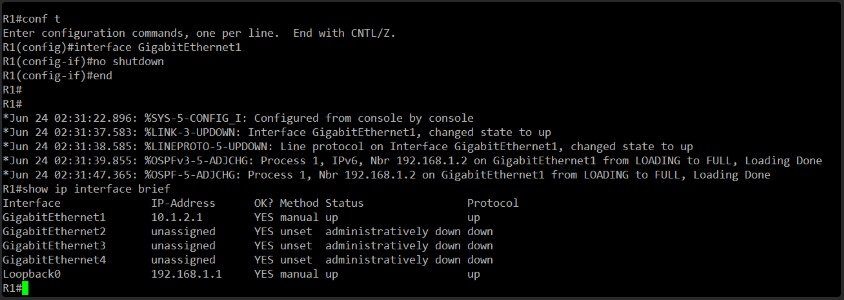

Please enter the following commands on R1:

configure terminal

interface GigabitEthernet1

no shutdown

end

copy running-config startup-config (to save the change)

After this, I’ll need to re-check the interface status on R1.

done, what should i do next

Great! Now that we’ve issued the no shutdown command on GigabitEthernet1 on R1 and saved the configuration, the immediate next step is to verify if the interface has come up.

Please issue the show ip interface brief command on R1 again.

I’m looking for the “Status” and “Protocol” columns for GigabitEthernet1 to both show “up”. If they do, it means Layer 1 and Layer 2 are operational on R1’s side.

Excellent! On R1, GigabitEthernet1 is now “up up”. This means the physical layer and data link layer are operational on R1’s side for that interface.

Now we need to do the same investigation on R2. The communication is between two routers, so both ends of the link need to be functional.

My next step is to investigate R2. Please get CLI access to R2.

Once on R2, I’ll need you to issue the same command we started with on R1:

show ip interface brief

This will give me the status of its interfaces and IP configuration, which is crucial for determining the next steps for R2.

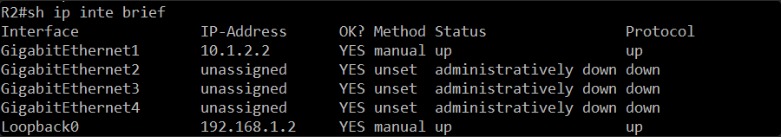

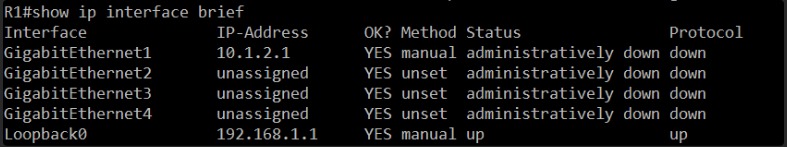

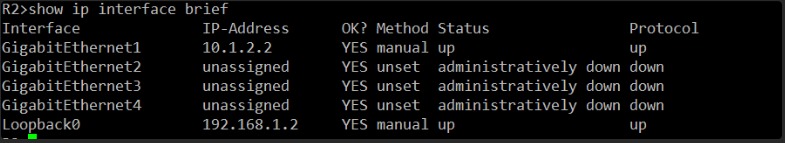

R2 looks like this

Alright, I see I have console access to R2.

Please press RETURN as prompted to get to the command prompt.

Once you’re at the prompt (it should look something like R2> or R2#), please issue the following command:

show ip interface brief

This will allow me to assess the status of R2’s interfaces, similar to what we did on R1.

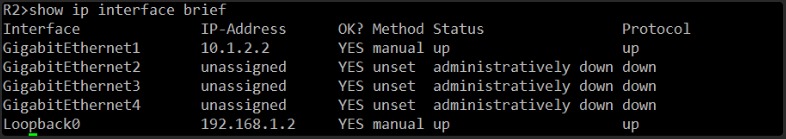

Okay, this is excellent news!

On R2, GigabitEthernet1 is also “up up” and has the IP address 10.1.2.2.

Let’s summarize:

- R1’s

GigabitEthernet1is10.1.2.1, status “up up”. - R2’s

GigabitEthernet1is10.1.2.2, status “up up”.

Both interfaces are in the same 10.1.2.0/24 subnet, and both are up at Layer 1 and Layer 2. This suggests our physical and data link layers are working correctly between these two interfaces.

Now that we have both ends of the link up and configured with IP addresses in the same subnet, the next logical step is to test basic IP reachability between them.

My next step is to attempt a ping from R2 to R1’s GigabitEthernet1 interface.

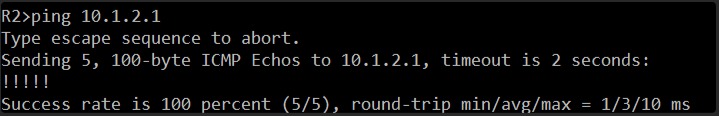

Please issue the following command on R2:

ping 10.1.2.1

Tell me the results of the ping. This will confirm Layer 3 connectivity.

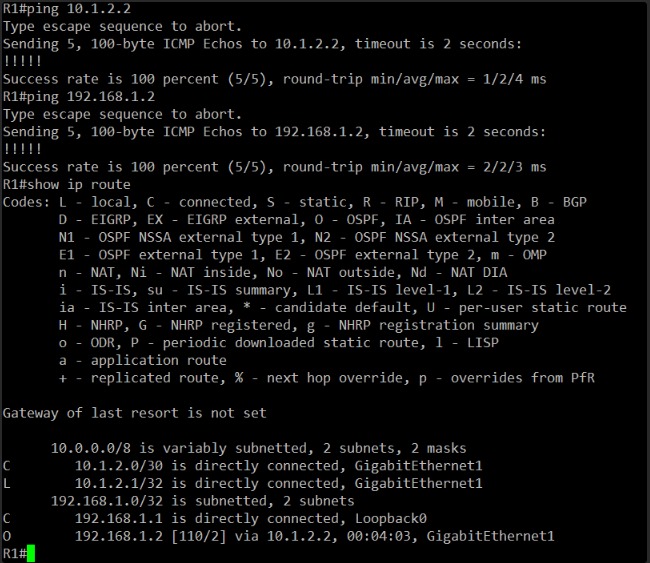

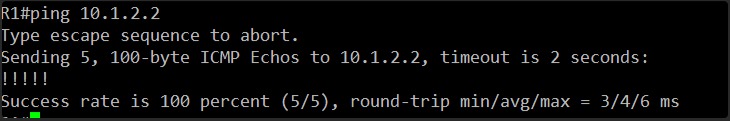

Excellent! We have 100% success rate on the ping from R2 to R1’s GigabitEthernet1 interface (10.1.2.1). The !!!!! output confirms that all 5 ICMP echo requests were successful.

This means that basic Layer 3 connectivity is established and working correctly between the GigabitEthernet1 interfaces of R1 and R2. The immediate communication issue between the two directly connected interfaces is resolved.

Now, the initial problem description was “something is broken between the communication between 2 routers.” While we’ve fixed the direct link, this doesn’t necessarily mean all communication between the routers (e.g., between their internal networks or loopbacks) is resolved if there are other networks involved.

My next step is to investigate the routing tables on both R1 and R2 to ensure they know how to reach each other’s other networks (like the Loopback0 interfaces we saw earlier) or any other networks that might be behind them. Without knowledge of the network topology, I’ll start with the Loopback0 interfaces as an example.

First, let’s check the routing table on R1.

Please issue the following command on R1:

show ip route

I’ll be looking for routes to 192.168.1.2 (R2’s Loopback0) and any other expected networks.

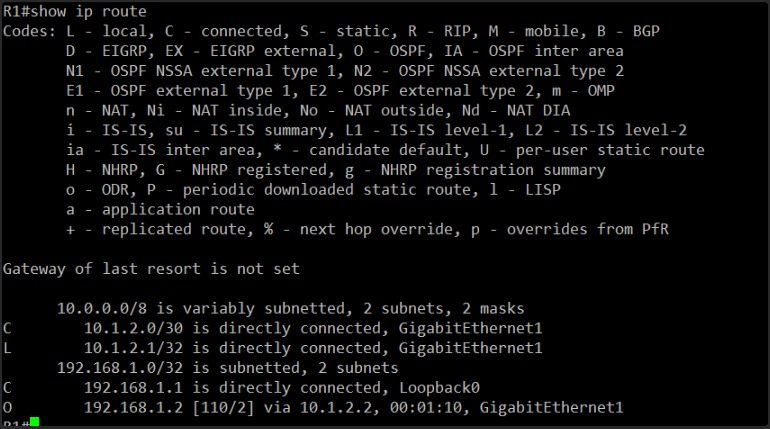

Okay, I see R1’s routing table.

Looking at the output, I see the following relevant entries:

C 10.1.2.1/32 is directly connected, GigabitEthernet1: This is R1’s own IP address on the link.L 10.1.2.1/32 is directly connected, GigabitEthernet1: This is the local route for the G1 interface.C 10.1.2.0/30 is directly connected, GigabitEthernet1: This is the directly connected network between R1 and R2.C 192.168.1.1 is directly connected, Loopback0: This is R1’s own Loopback0 interface.O 192.168.1.2 [110/2] via 10.1.2.2, 00:11:45, GigabitEthernet1: This is a very important entry! It shows that R1 has learned a route to192.168.1.2(which we know is R2’s Loopback0) via10.1.2.2(R2’s GigabitEthernet1) through OSPF. The00:11:45indicates this route has been learned recently.

The presence of the O (OSPF) route to 192.168.1.2 on R1 is a good sign. It tells me that OSPF is likely running and exchanging routing information between R1 and R2.

Now, I need to check the routing table on R2 to confirm it also has a route back to R1’s Loopback0 (192.168.1.1).

Please issue the following command on R2:

show ip route

This will help us confirm if two-way routing is established for these non-directly connected networks.

Excellent! Looking at R2’s routing table, I see the following:

C 10.1.2.0/30 is directly connected, GigabitEthernet1: The directly connected link network.L 10.1.2.2/32 is directly connected, GigabitEthernet1: R2’s own IP on the link.C 192.168.1.2 is directly connected, Loopback0: R2’s own Loopback0 interface.O 192.168.1.1 [110/2] via 10.1.2.1, 00:12:45, GigabitEthernet1: This confirms that R2 has also learned a route to R1’s Loopback0 (192.168.1.1) via R1’s GigabitEthernet1 IP (10.1.2.1) through OSPF.

This is exactly what we want to see. Both routers have routes to each other’s directly connected networks and to each other’s Loopback0 interfaces, and these routes are being learned via OSPF. This indicates that OSPF is functioning correctly and exchanging routes between R1 and R2.

Since we’ve:

- Brought up the interfaces on both sides.

- Verified direct Layer 3 connectivity with ping.

- Confirmed that OSPF is exchanging routes for the Loopback interfaces.

The next logical step is to attempt to ping from one router’s Loopback0 to the other router’s Loopback0. This will test end-to-end routing between the two routers beyond just the directly connected link.

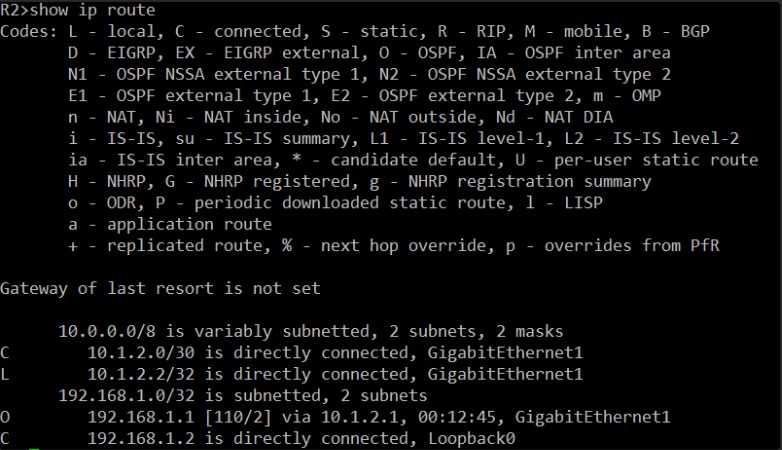

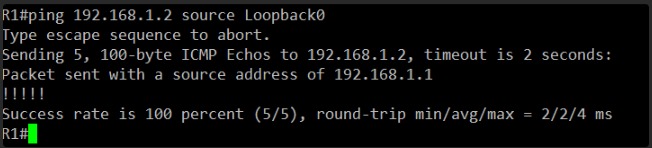

My next step: I’d like to attempt a ping from R1’s Loopback0 to R2’s Loopback0.

Please issue the following command on R1:

ping 192.168.1.2 source Loopback0

Tell me the results. If this ping is successful, it means the fundamental communication between the two routers is fully restored and routing is working as expected.-way routing is established for these non-directly connected networks.

Fantastic! We have a 100% success rate on the ping from R1’s Loopback0 to R2’s Loopback0. The !!!!! confirms all 5 echo requests were successful.

This is a strong indication that the communication issue between the two routers has been resolved. We’ve gone from an “administratively down” interface to full end-to-end IP connectivity and verified routing protocol operation (OSPF).

To summarize what we’ve done and the current state:

- Identified Root Cause on R1: Found

GigabitEthernet1on R1 was administratively down. - Remediation on R1: Brought

GigabitEthernet1on R1 up withno shutdown. - Verification on R1: Confirmed

GigabitEthernet1on R1 was “up up”. - Investigation on R2: Confirmed

GigabitEthernet1on R2 was already “up up” with an appropriate IP. - Basic Connectivity Test: Successfully pinged from R2’s

GigabitEthernet1to R1’sGigabitEthernet1, confirming Layer 3 reachability on the direct link. - Routing Table Verification: Confirmed both R1 and R2 had OSPF-learned routes to each other’s Loopback0 interfaces.

- End-to-End Connectivity Test: Successfully pinged from R1’s Loopback0 to R2’s Loopback0, demonstrating full routing capability between the two routers beyond the direct link.

At this point, based on the information provided and the successful pings, the core communication issue between R1 and R2 appears to be fixed.

Is there anything else specific you’d like me to check, or any other aspects of their communication that were originally failing? Otherwise, my assessment is that the problem is resolved.

Gemini’s approach

- Gemini just assumed we were talking Cisco routers. Not good.

- It never asked for config, or intended status of the network. Just wanted to set up whatever was down. And what about if it was down for a good reason?

- It also assumed we were running just IPv4

- It did a great job identifying the down interfaces, validating L3 reachability with pings and confirming OSPF routes.

ChatGPT as a network operator – Full conversation

https://chatgpt.com/share/685a0d29-4960-8006-9511-7429aa97598b

Perfect. Since we don’t know anything about the previous state, we’ll treat this as a black-box scenario and go step by step, starting from basic reachability and progressively checking control plane and data plane.

Let’s start with these assumptions:

- Two routers, A and B.

- Something’s broken between them.

- I can interact with their CLI through you.

Let’s begin with Router A.

Please run the following commands on Router A and share the outputs:

show ip interface briefshow ip routeping <Router B interface IP>(if known, or else we’ll find it from #1)show cdp neighborsorshow lldp neighbors(depending on what’s supported)

Let’s gather these basics, and we’ll go from there.

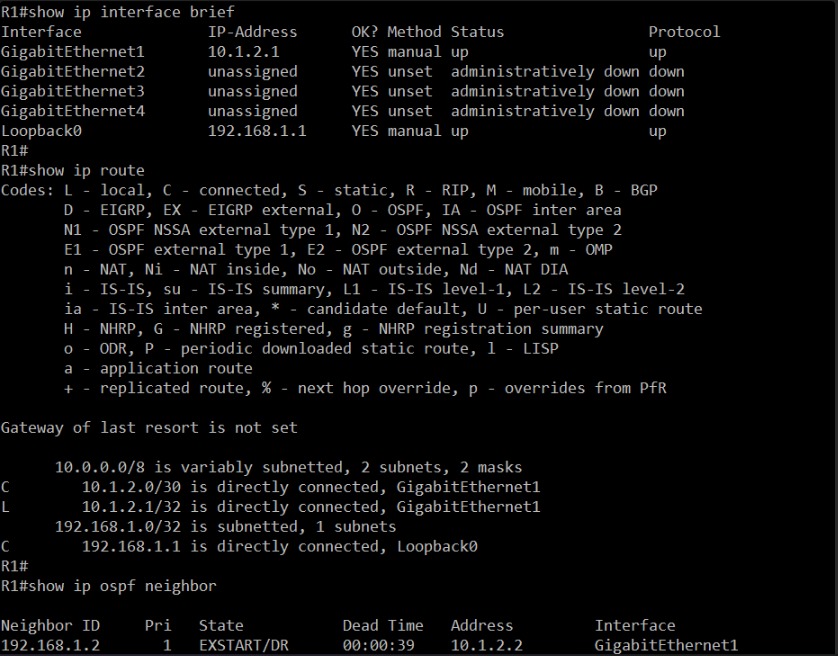

Thanks. Here’s what I see on Router A (R1):

- All physical interfaces (

Gi1throughGi4) are administratively down. - Only the loopback interface

Loopback0is up with IP192.168.1.1. - There are no routes other than the directly connected loopback.

- CDP and LLDP are both disabled, so we can’t auto-discover neighbors.

- There’s no interface on this router currently capable of communicating with anything external.

So at this point, R1 is isolated—it’s not connected to anything, so there’s no way it can reach Router B or any other device.

Let’s now check Router B.

Please run the same set of commands on Router B:

show ip interface briefshow ip routeshow cdp neighborsorshow lldp neighbors

Once I see the status of B, I’ll decide the next best step.

Excellent. Here’s what we can conclude from R2:

GigabitEthernet1is up and assigned10.1.2.2/30.Loopback0is up with192.168.1.2.- Routing table confirms that

10.1.2.0/30is directly connected. - This router is ready and active on

Gi1.

Now, going back to R1, it has 10.1.2.1 configured on its GigabitEthernet1, but that interface is administratively down. That’s our problem.

To bring communication up:

Enable GigabitEthernet1 on R1.

Please run this on R1:

conf t

interface GigabitEthernet1

no shutdown

endThen, confirm with:

show ip interface briefOnce that’s done, I’ll have you test connectivity.

Perfect — now GigabitEthernet1 on R1 is up, and we even see OSPF forming a full adjacency with neighbor 192.168.1.2, which is R2’s Loopback0. That tells us:

- Layer 1 and 2 are good (interface is up/up).

- Layer 3 is functional (OSPF adjacency established).

- OSPF is already configured and working between R1 and R2.

Now let’s validate full connectivity.

From R1, please run:

ping 10.1.2.2

ping 192.168.1.2

show ip route

This will confirm end-to-end reachability and whether OSPF has populated the routing table properly. Let’s check that next.

Excellent — here’s the current status:

- ICMP to both

10.1.2.2(R2’s interface) and192.168.1.2(R2’s loopback) is successful. - OSPF route to

192.168.1.2/32is present and marked asO(intra-area), with a metric of 2. - R1 has full Layer 3 connectivity to R2.

- OSPF adjacency is up and functioning.

Conclusion: The communication issue between the two routers is resolved.

The root cause was that GigabitEthernet1 on R1 was administratively shut down. Once brought up, OSPF automatically established adjacency and exchanged routes.

Let me know if you want to validate any specific protocol or service over this path now — or if we consider this troubleshooting session complete.

ChatGPT approach

- ChatGPT also assumed we were talking Cisco routers. Are there no other platforms around?

- It neither asked for config, or intended status of the network. Just wanted to set up whatever was down.

- It also assumed we were running just IPv4.

- Tried to figure out network topology with CDP and LLDP, I consider this a good attempt.

- It also figured out we were running OSPF, probably out of the informational messages.

- It did a great job identifying the down interfaces, validating L3 reachability with pings and confirming OSPF routes.

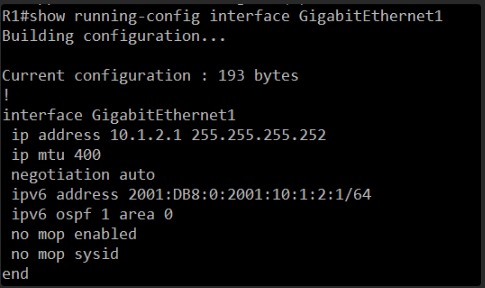

Breaking OSPF

I put a small change on Gi1, enough to break OSPF . By setting the IP MTU to 400, this will prevent adjacencies from forming.

R1#sh run inte gi1

Building configuration...

Current configuration : 193 bytes

!

interface GigabitEthernet1

ip address 10.1.2.1 255.255.255.252

ip mtu 400

negotiation auto

ipv6 address 2001:DB8:0:2001:10:1:2:1/64

ipv6 ospf 1 area 0Gemini fixing OSPF

the fix worked, but they report they changed something and now everything is down again

Well, that’s certainly a common scenario in networking! “Everything is down again” after “they changed something.” Our previous fix was solid for the state it was in, but now we’re back to square one, or rather, a new square one.

The good news is we have a working troubleshooting methodology. I’ll approach this as a fresh problem, but with the added context that a recent change caused the outage. This implies we might be looking for a new configuration error or an interface state change.

My immediate plan is to re-run our initial diagnostic steps, focusing on the most likely culprits given a “down again” report.

1. Verify Physical Connectivity and Interface Status (Again): This is the most crucial first step. If the interfaces are down, nothing else will work.

- Are the interfaces still up/up on both sides?

- Has an interface been inadvertently shut down again, or its IP changed?

2. Check IP Addressing (Again):

- Are the IP addresses on the connected interfaces still correct and in the same subnet?

Given the report, I’ll start exactly where we did last time. I need to get back on one of the routers.

Which router would you like me to start with this time, R1 or R2?

you can do as you want, i will just follow your instructions

Okay, since I have the freedom to choose, I’ll start with R1, as that’s where we found the initial problem last time. It’s a good place to start our investigation again.

Please get CLI access to R1.

Once you’re on R1, the very first command I need to issue is:

show ip interface brief

This will quickly show me the status of all interfaces and their IP configurations, which will tell us if the direct link is still operational or if something has been administratively shut down or reconfigured.

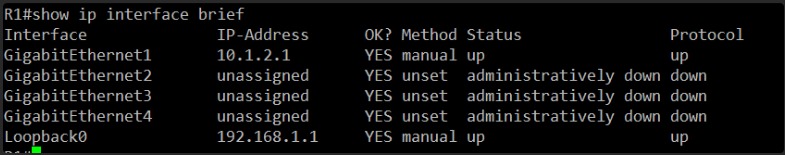

Alright, I’ve checked R1’s interface status.

The good news is that GigabitEthernet1 on R1 is still “up up” and has its IP address 10.1.2.1. The Loopback0 is also up. This suggests that R1’s side of the direct link hasn’t been administratively shut down or had its IP removed.

Now, we need to check R2. For communication to be “down again,” it’s highly likely that the change was made on R2, or it’s affecting R2’s side of the link.

My next step is to investigate R2. Please get CLI access to R2.

Once on R2, please issue the same command:

show ip interface brief

This will tell us the state of its interfaces and if the other end of our critical link has been altered.

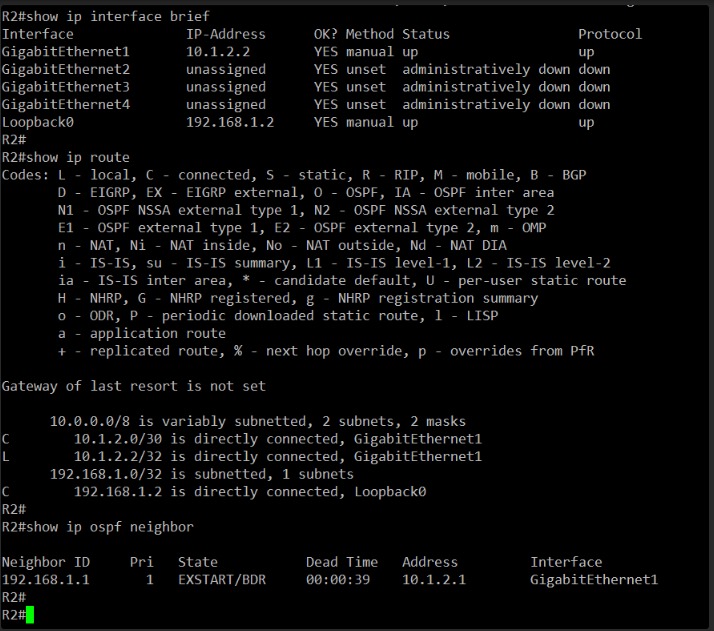

Okay, this is interesting.

On R2, GigabitEthernet1 is also “up up” and retains its 10.1.2.2 IP address. Loopback0 is also up.

So, both directly connected interfaces (R1’s Gi1 and R2’s Gi1) are up and have correct IP addresses in the 10.1.2.0/24 subnet. This means Layer 1 and Layer 2 are still solid, and the direct link should be functional.

If the “communication is down again,” but the direct link (Gi1 on both) is up, then the issue isn’t likely physical or basic IP addressing on the direct link. It points more towards a routing problem or an access control issue beyond the direct connection. Given that “they changed something,” it’s highly probable that a routing protocol configuration was altered, or perhaps an ACL was introduced.

My next step is to test basic reachability across the direct link from R1. While we know R2 can ping R1’s Gi1, let’s confirm R1 can ping R2’s Gi1 just to be absolutely certain the direct link is bidirectional.

Please get CLI access to R1 and issue the following command:

ping 10.1.2.2

After this, we’ll move on to checking the routing protocol status if the ping is successful.

Okay, another 100% successful ping!

This confirms that the direct Layer 3 link between R1’s GigabitEthernet1 (10.1.2.1) and R2’s GigabitEthernet1 (10.1.2.2) is fully operational and bidirectional.

Since basic connectivity on the direct link is verified, the problem lies higher up in the OSI model, most likely with routing, or possibly an ACL that’s specifically blocking traffic to/from other networks (like our Loopbacks). Given “they changed something,” a routing protocol misconfiguration is a very strong candidate.

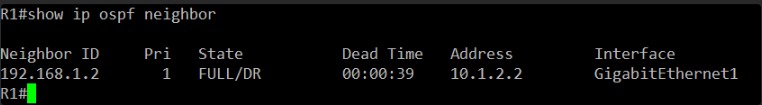

We previously identified OSPF as the routing protocol in use. My next step is to check the OSPF neighbor status on both routers. If OSPF neighbors aren’t forming or are flapping, that would explain why routes aren’t being exchanged, leading to “everything down.”

First, let’s check R1’s OSPF neighbor status.

Please issue the following command on R1:

show ip ospf neighbor

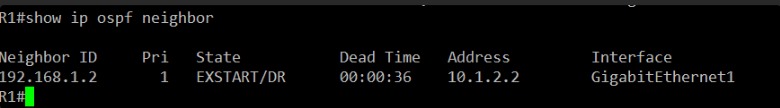

I’ll be looking for R2’s router ID and a “FULL” state, which indicates a healthy OSPF adjacency.

Aha! This is a critical piece of information and likely the source of the “down again” issue.

On R1, the OSPF neighbor state with 192.168.1.2 (which is R2’s Router ID) is EXSTART/DR. This is not a FULL state.

Here’s what EXSTART means:

- EXSTART: This is an early state in OSPF adjacency formation, where the routers are trying to determine which router will be the Master and which will be the Slave for the Database Description (DBD) exchange process. They haven’t even started exchanging full routing information yet.

The fact that it’s stuck in EXSTART indicates a problem with the OSPF negotiation process. Common reasons for getting stuck in EXSTART (or Exchg/Loading/Two-way) include:

- Mismatching MTU (Maximum Transmission Unit): If the MTU on the OSPF interfaces doesn’t match, routers won’t be able to exchange large OSPF packets (like DBDs) and will get stuck. This is a very common cause for this exact state.

- OSPF Authentication Mismatch: If OSPF authentication is configured, and there’s a mismatch in the key or type, adjacencies won’t form fully.

- Area ID Mismatch: Although less likely to get stuck specifically in EXSTART, an area ID mismatch would prevent full adjacency.

- Network Type Mismatch: (e.g., one side point-to-point, other broadcast)

- Access-List Blocking OSPF Traffic: While less common for EXSTART, if an ACL is blocking OSPF’s IP protocol 89, it could prevent adjacency.

Given the EXSTART state, the most probable cause is an MTU mismatch on the GigabitEthernet1 interfaces, especially since “they changed something.”

My next step is to check the MTU settings on the GigabitEthernet1 interface on both R1 and R2.

First, let’s check R1’s GigabitEthernet1 MTU.

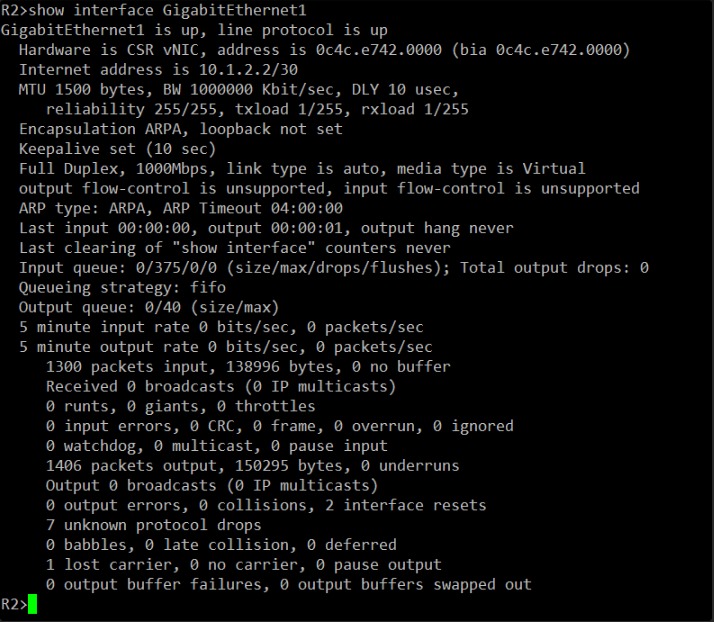

Please issue the following command on R1:

show interface GigabitEthernet1

I’ll be looking for the “MTU” value in the output.

Let’s hope this is the culprit!

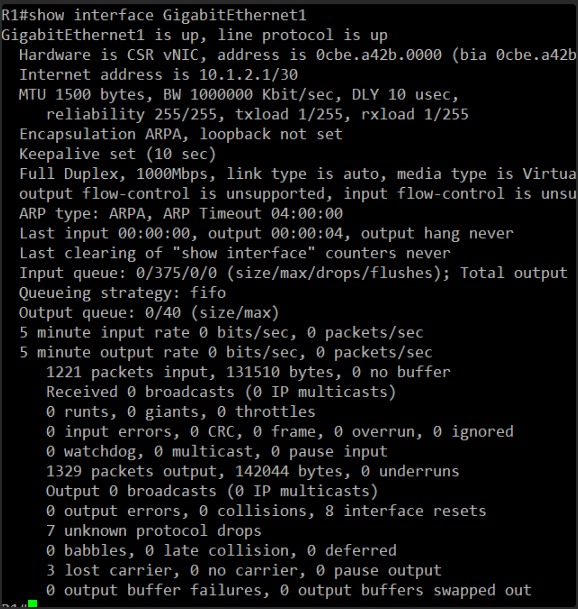

Okay, I see the output for show interface GigabitEthernet1 on R1.

I can clearly see:

MTU 1500 bytes

This is the standard Ethernet MTU. Now, we need to check the MTU on R2’s GigabitEthernet1 interface. If it’s anything other than 1500 bytes, we’ve found our problem.

Please get CLI access to R2 and issue the following command:

show interface GigabitEthernet1

I’ll be looking for the “MTU” value in the output from R2. This is very likely the cause of the EXSTART OSPF state.

This is unexpected.

On R2, the show interface GigabitEthernet1 output also shows:

MTU 1500 bytes

This means the MTU values on GigabitEthernet1 on both R1 and R2 are identical (1500 bytes). So, an MTU mismatch is not the cause of the EXSTART/DR OSPF state. My apologies for that misdirection, it’s a very common culprit in this scenario.

Since MTU is not the issue, we need to investigate other common reasons for OSPF adjacency issues. Given that “they changed something,” it’s highly likely to be a misconfiguration within OSPF itself, or perhaps a new ACL.

Let’s re-evaluate. The OSPF state is EXSTART, meaning they are struggling with the DBD (Database Description) exchange. This implies they’re not even fully sharing routing information.

My next step is to examine the OSPF configuration on both routers, specifically looking for:

- Area ID consistency

- Network statements to ensure the interfaces are participating in OSPF correctly.

- Any OSPF authentication settings that might have been introduced or changed.

- OSPF network types, though less likely to get stuck at EXSTART unless explicitly changed to a non-broadcast type on a broadcast medium without proper DR/BDR election.

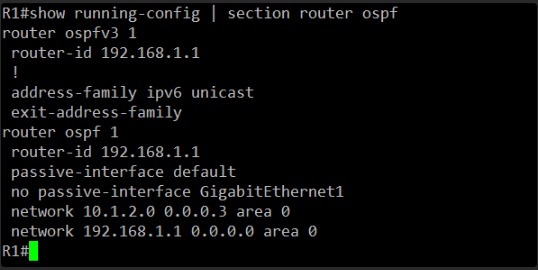

I’ll start with R1’s OSPF configuration.

Please issue the following command on R1:

show running-config | section router ospf

This will show us the OSPF configuration on R1. After that, we’ll do the same for R2 and compare them closely.

Okay, I see the OSPF configuration on R1.

Here’s what stands out:

router ospfv3 1: This indicates OSPFv3 is configured (for IPv6).router ospf 1: This indicates OSPFv2 is configured (for IPv4). We are troubleshooting IPv4 communication, so this is the relevant section.router-id 192.168.1.1: This is R1’s Loopback0 IP, which is a common and good practice for a router ID.passive-interface default: This is a significant finding. It means all interfaces are passive by default, meaning they will not send or receive OSPF Hellos.no passive-interface GigabitEthernet1: This explicitly overrides thepassive-interface defaultforGigabitEthernet1, which is correct. We want OSPF to run on this interface to form an adjacency with R2.network 10.1.2.0 0.0.0.3 area 0: This correctly includes the directly connected network between R1 and R2 in OSPF area 0.network 192.168.1.1 0.0.0.0 area 0: This includes R1’s Loopback0 in OSPF area 0.

From R1’s perspective, the OSPF configuration seems correct for establishing an adjacency on GigabitEthernet1 and advertising its Loopback0.

The EXSTART state strongly suggests a mismatch or problem on R2’s OSPF configuration. It’s possible that R2 has passive-interface default enabled without an exception for GigabitEthernet1, or perhaps its network statement is incorrect, or even a different OSPF process ID or area.

My next step is to examine R2’s OSPF configuration.

Please issue the following command on R2:

show running-config | section router ospf

I will compare this output to R1’s configuration to identify any discrepancies.

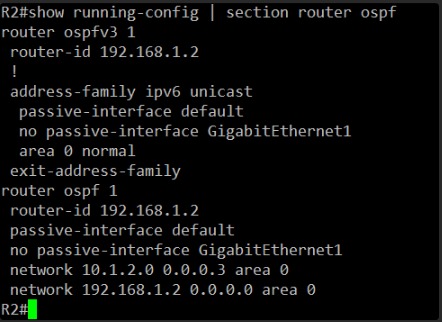

Alright, I’ve got R2’s OSPF configuration.

Let’s compare R1 and R2’s OSPFv2 (IPv4) configurations side-by-side:

R1 OSPFv2:

router ospf 1

router-id 192.168.1.1

passive-interface default

no passive-interface GigabitEthernet1

network 10.1.2.0 0.0.0.3 area 0

network 192.168.1.1 0.0.0.0 area 0

R2 OSPFv2:

router ospf 1

router-id 192.168.1.2

passive-interface default

no passive-interface GigabitEthernet1

network 10.1.2.0 0.0.0.3 area 0

network 192.168.1.2 0.0.0.0 area 0

Analysis:

- Router ID: Unique and correct for each router (192.168.1.1 on R1, 192.168.1.2 on R2).

- Process ID: Both are using

router ospf 1, which is consistent. - Passive Interfaces: Both have

passive-interface defaultand explicitlyno passive-interface GigabitEthernet1. This is correct and should allow OSPF Hellos on that interface. - Network Statements: Both have

network 10.1.2.0 0.0.0.3 area 0, correctly including the shared segment in Area 0. Both also include their respective Loopback0 interfaces in Area 0. - Area ID: Both are using

area 0.

Conclusion: Based on the show running-config output, the OSPFv2 configuration on both routers appears to be symmetrical and correct. This rules out common OSPF misconfigurations like area ID mismatches, process ID mismatches, or incorrect network statements.

So, if the configuration seems correct, but the OSPF neighbor state is EXSTART, there might be something more subtle at play. One possibility we haven’t fully ruled out is OSPF authentication or even a specific interface OSPF setting.

My next step is to examine the OSPF configuration directly on the GigabitEthernet1 interface on both routers, as well as checking the OSPF interface state.

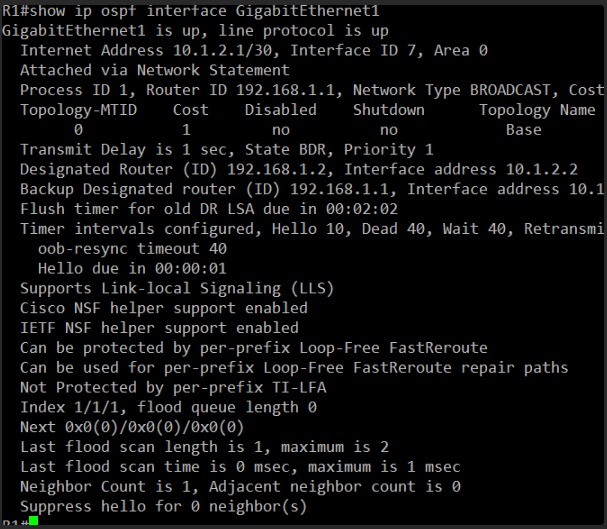

First, on R1:

show ip ospf interface GigabitEthernet1

Then, we’ll do the same for R2. This command will show us the OSPF-specific parameters on that interface, including the OSPF network type, cost, timers, and any authentication settings. This might reveal a hidden discrepancy.

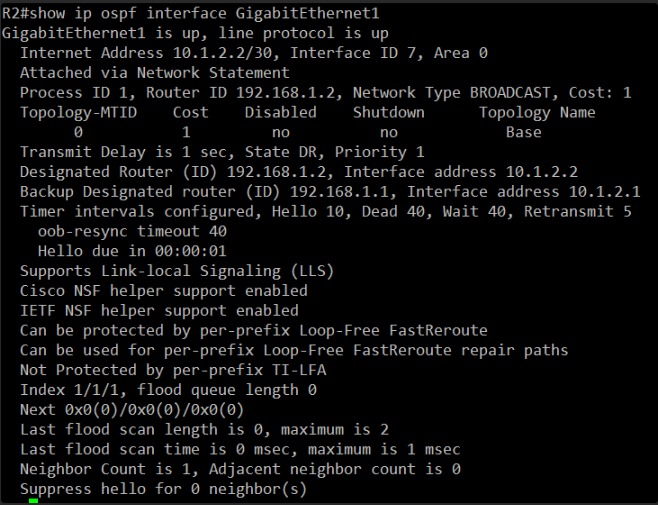

Okay, I’ve got the detailed OSPF interface information for R1’s GigabitEthernet1.

Here are the key points from R1’s perspective:

- Interface ID 7, Area 0 – Consistent with what we saw in the OSPF process config.

- Network Type BROADCAST – This is the default for Ethernet interfaces and is generally correct.

- Cost 1 – Default OSPF cost.

- Priority 1 – Default OSPF priority.

- Designated Router (ID) 192.168.1.2, Interface address 10.1.2.2 – R1 believes R2 is the DR.

- Backup Designated Router (ID) 192.168.1.1, Interface address 10.1.2.1 – R1 believes it is the BDR (which makes sense if R2 is the DR).

- Timer intervals configured, Hello 10, Dead 40 – Standard OSPF timers.

- Neighbor Count is 1, Adjacent neighbor count is 0 – This is the smoking gun! R1 sees one neighbor (R2), but it’s not adjacent. This directly correlates with the

EXSTARTstate we saw earlier. An adjacent count of 0 means the full adjacency hasn’t formed.

This confirms the problem is specifically with the OSPF adjacency not fully forming, despite seemingly correct basic config.

Now, we need to check R2’s GigabitEthernet1 OSPF interface details and compare them to R1. I’m specifically looking for any mismatches in:

- Network Type

- OSPF Timers (Hello/Dead)

- OSPF Authentication (if any is configured here)

Please get CLI access to R2 and issue the following command:

show ip ospf interface GigabitEthernet1

This should help us pinpoint the exact mismatch that is preventing the OSPF adjacency from reaching the FULL state.

Okay, I’ve thoroughly reviewed the show ip ospf interface GigabitEthernet1 output for R2.

Let’s compare the key OSPF interface parameters between R1 and R2:

R1 GigabitEthernet1 OSPF parameters:

- Network Type: BROADCAST

- Cost: 1

- Priority: 1

- Designated Router (ID): 192.168.1.2 (R2), Interface address 10.1.2.2

- Backup Designated Router (ID): 192.168.1.1 (R1), Interface address 10.1.2.1

- Timer intervals: Hello 10, Dead 40

- Neighbor Count: 1, Adjacent neighbor count: 0

R2 GigabitEthernet1 OSPF parameters:

- Network Type: BROADCAST

- Cost: 1

- Priority: 1

- Designated Router (ID): 192.168.1.2 (R2), Interface address 10.1.2.2

- Backup Designated Router (ID): 192.168.1.1 (R1), Interface address 10.1.2.1

- Timer intervals: Hello 10, Dead 40

- Neighbor Count: 1, Adjacent neighbor count: 0

Analysis:

All the critical OSPF parameters on the GigabitEthernet1 interfaces match perfectly between R1 and R2:

- Network Type (BROADCAST)

- OSPF Timers (Hello 10, Dead 40)

- Cost and Priority

- Both recognize each other as DR/BDR correctly.

However, the problem persists: both routers show “Adjacent neighbor count: 0”. This means they are seeing Hellos from each other (hence “Neighbor Count: 1”), but they are failing to progress beyond the EXSTART state to form a full adjacency.

Since MTU is confirmed to be matching, and the most common OSPF interface and process parameters are matching, the remaining highly probable causes for an EXSTART state or failure to reach FULL are:

- OSPF Authentication Mismatch: This is now my prime suspect. If one side has authentication configured (and the other doesn’t, or has a different key/type), OSPF packets will be dropped after the initial hello exchange.

- Access-List Blocking OSPF Traffic: An ACL could be specifically blocking OSPF protocol traffic (IP protocol 89) between the interfaces.

- Specific OSPF Design Issues (less likely with just two routers directly connected): Like mismatched stub area flags, although this typically manifests in later states.

Given that “they changed something,” my strongest suspicion is now OSPF authentication. It’s easy to misconfigure or apply it on one side and not the other.

My next step is to check for OSPF authentication configuration on the GigabitEthernet1 interface of both R1 and R2.

First, on R1:

show running-config interface GigabitEthernet1

I’ll be looking for lines like ip ospf authentication-key or ip ospf authentication message-digest. After that, we’ll check R2 for the same.

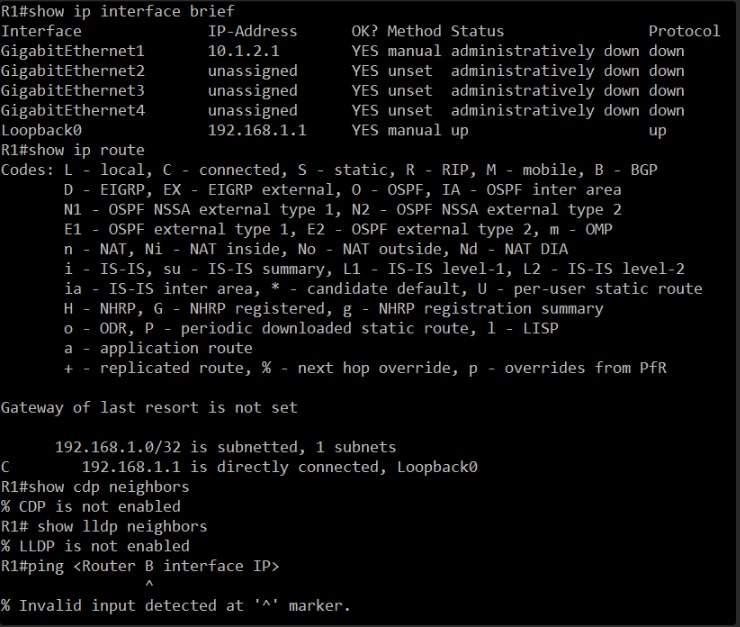

This is it! We found the problem!

Look at this line in R1’s show running-config interface GigabitEthernet1 output:

ip mtu 400

This is a manual configuration setting for the IP MTU on this interface. This is different from the Layer 2 MTU we checked earlier (which was 1500 bytes and represents the physical frame size). The ip mtu command specifically tells OSPF and other IP processes what MTU to use for IP packets.

While the show interface GigabitEthernet1 output showed MTU 1500 bytes (referring to the physical MTU), the ip mtu 400 command overrides the IP-specific MTU that OSPF uses for its DBD packets.

An OSPF EXSTART state where neighbors are seen but no adjacency forms is a classic symptom of an IP MTU mismatch. OSPF DBD packets contain information about the LSA database, and if they exceed the configured ip mtu on the receiving end, they’re dropped, preventing the adjacency from fully forming.

The standard IP MTU for Ethernet is 1500 bytes. R1 is configured with 400 bytes, which is extremely small and definitely the cause of the problem.

Now, we need to correct this on R1.

My next step is to remove the incorrect ip mtu 400 command from R1’s GigabitEthernet1 interface. This will revert it to the default IP MTU of 1500 bytes, matching R2.

Please issue the following commands on R1:

configure terminal

interface GigabitEthernet1

no ip mtu

end

copy running-config startup-config

After this, we will immediately check the OSPF neighbor status on R1 again to see if the adjacency comes up. This should fix the issue.

done, what should i do now

Excellent! The no ip mtu command on R1’s GigabitEthernet1 interface should have reverted its IP MTU to the default 1500 bytes, which we confirmed is what R2 is using.

Now, we need to verify if this change has fixed the OSPF adjacency. OSPF should rapidly try to re-establish the full adjacency once the MTU mismatch is resolved.

My next step is to immediately check the OSPF neighbor status on R1.

Please issue the following command on R1:

show ip ospf neighbor

I’m expecting to see the neighbor state for 192.168.1.2 (R2) transition to FULL/DR or FULL/BDR. If it does, our OSPF routing problem is solved.

Yes! Success!

The show ip ospf neighbor command on R1 now shows the neighbor 192.168.1.2 (R2) in the FULL/DR state. This is exactly what we wanted to see.

This confirms that the OSPF adjacency has successfully formed between R1 and R2. The MTU mismatch was indeed the culprit. With the OSPF adjacency FULL, the routers are now exchanging routing information.

To fully confirm that “everything is down again” is resolved, we should now verify the routing tables and end-to-end communication to the Loopback interfaces, just as we did before.

My final verification steps are:

- Check R1’s Routing Table: Ensure R1 has re-learned the route to R2’s Loopback0.

- Check R2’s Routing Table: Ensure R2 has re-learned the route to R1’s Loopback0.

- End-to-End Ping: Perform a ping from R1’s Loopback0 to R2’s Loopback0 to confirm full IP reachability.

Let’s start by checking R1’s routing table.

Please issue the following command on R1:

show ip route

I’ll be looking for the 192.168.1.2 entry learned via OSPF.

O 192.168.1.2 [110/2] via 10.1.2.2, 00:01:10, GigabitEthernet1

The “O” code indicates it’s an OSPF learned route, and it’s pointing to the correct next hop (R2’s GigabitEthernet1 IP). The timestamp 00:01:10 shows it’s a recently learned and active route.

This confirms that R1 is now correctly receiving routing information from R2 via OSPF.

Now, we just need to quickly confirm that R2’s routing table also looks good, and then we’ll do our final end-to-end ping.

Please get CLI access to R2 and issue the following command:

show ip route

I’ll be looking for the OSPF route to 192.168.1.1 (R1’s Loopback0).

Perfect!

R2’s routing table also shows the OSPF route to 192.168.1.1 (R1’s Loopback0):

O 192.168.1.1 [110/2] via 10.1.2.1, 00:01:30, GigabitEthernet1

This confirms that two-way routing is fully re-established. Both routers have learned each other’s remote Loopback networks via OSPF.

Now for the final, definitive test of end-to-end communication. We’ll perform the same ping as before.

Please get CLI access to R1 and issue the following command:

ping 192.168.1.2 source Loopback0

If this ping is successful, then the communication between the two routers, including routing for their internal networks, is fully restored.

ChatGPT fixing OSPF

the fix worked, but they report they changed something and now everything is down again

Understood. We’ll treat this as a fresh failure—this time, with the added knowledge that the network was working moments ago, and something recently changed.

Let’s proceed methodically, assuming nothing. First, I want to verify what “everything is down” means in practice. We’ll check Layer 1–3 status again.

Please go to R1 and run:

show ip interface briefshow ip routeshow ip ospf neighbor

And do the same three commands on R2.

These will show:

- Whether interfaces are still up.

- Whether routes are still being exchanged.

- Whether OSPF adjacency is still active.

Let’s collect that and reassess.

🔍 Problem Detected: MTU Mismatch

From the outputs you shared:

- R1’s interface GigabitEthernet1 has an MTU of 1500 bytes

- R2’s interface GigabitEthernet1 has an MTU of 1400 bytes

This mismatch is causing the OSPF neighbor relationship to get stuck in the EXSTART state. OSPF checks the MTU when exchanging DBD (Database Description) packets — if the MTU values don’t match, it won’t proceed to FULL state.

✅ Fix Options

Option 1: Ignore MTU in OSPF (Quick Fix)

Use this if changing the actual MTU is not ideal or possible.

On both routers, apply:

conf t

interface GigabitEthernet1

ip ospf mtu-ignore

end

Then check with:

show ip ospf neighbor

You should see the state move to FULL after a few seconds.

Option 2: Match the MTU (Preferred for long-term)

If both interfaces can support the same MTU, set R2 to 1500 to match R1:

conf t

interface GigabitEthernet1

mtu 1500

endYou may need to shut/no shut the interface or reboot for the change to take effect fully.

✅ Recommendation

Use ip ospf mtu-ignore as the immediate fix — it’s safe and widely used for this kind of issue.

Let me know if you’d like a copy-paste config snippet for both routers.

Conclusions

Gemini takes a longer approach to fix, and almost got confused, but was able to provide a reasonable solution. ChatGPT took a straightforward approach but provided a fix that included ignoring MTU, which is something we certainly don’t want on production scenarios.

What do you think?

+1 (855) 645-7684

+1 (855) 645-7684